
Concurrency is a cornerstone of modern software development, enabling applications to make progress on multiple tasks at once. With the rise of multi-core processors and the demand for high-performance computing, understanding and managing concurrency has become essential for developers. This article examines virtual threads, a modern approach to concurrency, and explores strategies for using concurrency while avoiding the common pitfalls that hurt performance and reliability.

Introduction to Taming the Virtual Threads: Embracing Concurrency with Pitfall Avoidance

What Are Virtual Threads?

Virtual threads are lightweight threads managed by a language runtime, such as the Java Virtual Machine (JVM), rather than directly by the operating system. They offer a more efficient way to handle concurrency, allowing developers to create thousands or even millions of threads without the memory and scheduling overhead of traditional OS-backed threads.

The Evolution of Concurrency in Software Development

Concurrency has evolved significantly over the years, from early single-threaded applications to complex, multi-threaded systems. As the need for responsive and scalable software grew, so did the methods for managing concurrent tasks. Traditional threading models, though powerful, often come with challenges such as resource contention, deadlocks, and race conditions. Virtual threads represent a new chapter in this evolution, promising to simplify concurrency management while enhancing performance.

Importance of Concurrency in Modern Applications

In today’s digital landscape, applications must handle multiple tasks at once: processing user requests, managing background jobs, and interacting with various services, often in real time. Concurrency lets these tasks make progress at the same time (and, on multi-core hardware, run in parallel), improving the responsiveness and scalability of applications. Whether it’s a web server handling numerous client connections or a mobile app performing background data synchronization, concurrency is key to delivering smooth and efficient user experiences.

Understanding Concurrency in Computing

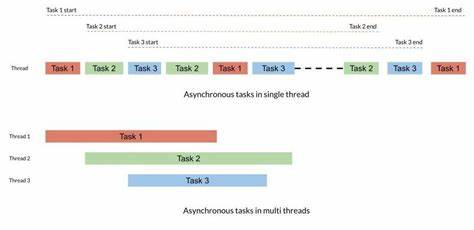

Basics of Concurrency: Processes vs. Threads

Concurrency in computing can be achieved through processes or threads. A process is an independent execution unit with its own memory space, while a thread is a lighter-weight unit of execution that shares the memory space of its parent process. Threads use fewer resources than processes, making them a popular choice for concurrent programming.

Types of Concurrency Models

There are several concurrency models, including:

- Preemptive Multitasking: The operating system controls the allocation of CPU time to threads, interrupting and switching between them as needed.

- Cooperative Multitasking: Threads voluntarily yield control periodically, allowing other threads to execute.

- Event-Driven Concurrency: An event loop dispatches events or messages to handlers, often on a single thread; this model is common in GUI applications and servers.

Benefits of Concurrency in Software Performance

Concurrency can significantly improve the performance and responsiveness of applications by enabling them to handle multiple tasks simultaneously. It allows for better CPU utilization, as idle time (such as waiting for I/O operations) can be used to execute other tasks. Additionally, concurrency can lead to faster completion of tasks and more responsive user interfaces, enhancing the overall user experience.

Virtual Threads Explained

Overview of Virtual Threads

Virtual threads are a modern approach to concurrency that decouples the number of Java threads from the number of operating-system threads. Traditional (platform) threads are each backed by an OS thread and scheduled by the operating system; virtual threads are scheduled by the JVM, which runs many of them on a small number of OS threads. This allows an application to create a vast number of threads without most of the memory and context-switching overhead that the same number of OS threads would incur.
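As a minimal sketch, assuming JDK 21 or later, the snippet below starts one virtual thread and one platform thread through the Thread.Builder API and reports which kind of thread ran the task. The class and method names (VirtualThreadBasics, runOnVirtualThread, runOnPlatformThread) are illustrative only.

```java
import java.util.concurrent.atomic.AtomicBoolean;

// Assumes JDK 21+, where virtual threads are a final feature (JEP 444).
class VirtualThreadBasics {

    // Starts a named virtual thread and reports whether the task ran on a virtual thread.
    static boolean runOnVirtualThread() throws InterruptedException {
        AtomicBoolean sawVirtual = new AtomicBoolean(false);
        Thread vt = Thread.ofVirtual()
                .name("demo-virtual")
                .start(() -> sawVirtual.set(Thread.currentThread().isVirtual()));
        vt.join(); // wait for the task to finish
        return sawVirtual.get();
    }

    // Same task on a platform (OS-backed) thread, for comparison.
    static boolean runOnPlatformThread() throws InterruptedException {
        AtomicBoolean sawVirtual = new AtomicBoolean(true);
        Thread pt = Thread.ofPlatform()
                .name("demo-platform")
                .start(() -> sawVirtual.set(Thread.currentThread().isVirtual()));
        pt.join();
        return sawVirtual.get();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("virtual:  " + runOnVirtualThread());  // prints "virtual:  true"
        System.out.println("platform: " + runOnPlatformThread()); // prints "platform: false"
        // Shorthand for starting an unnamed virtual thread.
        Thread.startVirtualThread(() -> System.out.println("hello from a virtual thread")).join();
    }
}
```

Thread.startVirtualThread covers the simple unnamed case; for many tasks, Executors.newVirtualThreadPerTaskExecutor is the more common entry point.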

Virtual Threads vs. Traditional Threads

Traditional (platform) threads are typically heavyweight: each one is backed by an OS thread with a large stack reservation, and switching between them is done by the operating system. Virtual threads, on the other hand, are lightweight: their stacks live on the Java heap and grow as needed, and the JVM switches between them cheaply. This makes virtual threads well suited to applications with very large numbers of concurrent, mostly I/O-bound tasks.

How Virtual Threads Operate within the JVM

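Within the JVM, a virtual thread is an ordinary Java object whose stack frames are stored on the heap. The JVM schedules virtual threads onto a small pool of platform threads called carrier threads (by default a ForkJoinPool sized to the number of available processors). When a virtual thread performs a blocking operation such as socket I/O or Thread.sleep, the JVM usually unmounts it from its carrier so the carrier can run another virtual thread; the blocked virtual thread is mounted again, possibly on a different carrier, once it can continue. Some operations cannot unmount and instead pin the carrier; in JDK 21, for example, blocking inside a synchronized block pins the carrier thread. Because blocking no longer ties up an OS thread, the JVM can manage thousands or even millions of virtual threads with modest resources.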
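While a virtual thread executes, the JVM runs it on a platform "carrier" thread. To make that visible, the sketch below (JDK 21 or later) captures the virtual thread's toString() before and after a blocking Thread.sleep. On JDK 21 that text names the current carrier, such as a ForkJoinPool worker, and the two values may name different carriers; the format is an implementation detail and should not be parsed in real code. CarrierDemo and observeCarrier are hypothetical names.

```java
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Assumes JDK 21+. The toString() format is an implementation detail.
class CarrierDemo {

    // Captures the virtual thread's description before and after a blocking call.
    static String[] observeCarrier() throws InterruptedException, ExecutionException {
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            Future<String[]> result = executor.submit(() -> {
                String before = Thread.currentThread().toString();
                Thread.sleep(50); // blocking call: the virtual thread may unmount here
                String after = Thread.currentThread().toString();
                return new String[] {before, after};
            });
            return result.get();
        }
    }

    public static void main(String[] args) throws Exception {
        String[] seen = observeCarrier();
        System.out.println("before sleep: " + seen[0]);
        System.out.println("after sleep:  " + seen[1]);
    }
}
```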

Benefits of Virtual Threads

Enhanced Resource Management

Virtual threads consume significantly fewer resources than traditional threads. Because they are lightweight, they require less memory and make switching between threads cheaper, freeing up system resources for other tasks.

Improved Scalability

Scalability is one of the most significant advantages of virtual threads. With platform threads, the number of tasks an application can have blocked at once is limited by how many OS threads it can afford, so servers typically cap their thread pools. Virtual threads let developers create one thread per concurrent task, even for very large numbers of tasks, without exhausting OS thread limits. Other limits, such as memory, database connection pools, and downstream services, still apply.
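A sketch of the thread-per-task style, assuming JDK 21 or later: each of 10,000 tasks gets its own virtual thread and blocks in Thread.sleep as a stand-in for I/O. Run one after another, the tasks would take 10,000 × 100 ms; with virtual threads the sleeps overlap, so the run typically finishes in a small fraction of that, although actual timings depend on the machine. ManyVirtualThreads and runSleepingTasks are illustrative names.

```java
import java.time.Duration;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicInteger;

// Assumes JDK 21+ (virtual threads and Thread.sleep(Duration)).
class ManyVirtualThreads {

    // Submits `taskCount` blocking tasks, one virtual thread per task,
    // and returns how many completed.
    static int runSleepingTasks(int taskCount, Duration sleep) {
        AtomicInteger completed = new AtomicInteger();
        // close(), called by try-with-resources, waits for all submitted tasks.
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int i = 0; i < taskCount; i++) {
                executor.submit(() -> {
                    Thread.sleep(sleep); // simulated blocking I/O
                    completed.incrementAndGet();
                    return null;
                });
            }
        }
        return completed.get();
    }

    public static void main(String[] args) {
        long start = System.nanoTime();
        int done = runSleepingTasks(10_000, Duration.ofMillis(100));
        long elapsedMs = (System.nanoTime() - start) / 1_000_000;
        System.out.println(done + " tasks completed in " + elapsedMs + " ms");
    }
}
```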

Better CPU Utilization

For I/O-bound workloads, virtual threads improve CPU utilization: when one virtual thread blocks on I/O, the JVM runs another one on the freed carrier thread, so the cores are not left idle while tasks wait. Virtual threads do not make CPU-bound code faster, since by default the number of carrier threads roughly matches the number of cores.

Simplified Thread Management

Managing threads in a traditional concurrency model can be complex and error-prone, with developers needing to carefully balance the number of threads and resources. Virtual threads simplify this process by letting developers write plain, blocking, thread-per-task code instead of sizing thread pools or restructuring logic into asynchronous callbacks, which keeps code more straightforward. They do not, however, remove the need to synchronize access to shared state.

Concurrency Challenges

Race Conditions

A race condition occurs when the outcome of a program depends on the relative timing of threads, typically because two or more threads access shared data without synchronization and at least one of them writes to it. Race conditions are notoriously difficult to detect and debug, often leading to subtle and intermittent bugs.
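The classic example is an unsynchronized counter. In the sketch below (JDK 21 or later; names are illustrative), many virtual threads increment both a plain int field and an AtomicInteger. The plain counter++ is a read-modify-write sequence, so concurrent updates can be lost and its final value can end up lower than expected, while the atomic counter is always exact. How often updates are lost depends on the hardware and scheduling, which is exactly what makes such bugs intermittent.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicInteger;

// Assumes JDK 21+. Demonstrates lost updates on an unsynchronized counter.
class RaceConditionDemo {

    private static int unsafeCounter = 0;                              // shared, unsynchronized
    private static final AtomicInteger safeCounter = new AtomicInteger();

    // Returns {unsafe total, atomic total} after tasks * incrementsPerTask increments of each.
    static int[] incrementConcurrently(int tasks, int incrementsPerTask) {
        unsafeCounter = 0;
        safeCounter.set(0);
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int t = 0; t < tasks; t++) {
                executor.submit(() -> {
                    for (int i = 0; i < incrementsPerTask; i++) {
                        unsafeCounter++;               // read-modify-write: updates can be lost
                        safeCounter.incrementAndGet(); // atomic: no update is lost
                    }
                });
            }
        } // close() waits for every task to finish
        return new int[] {unsafeCounter, safeCounter.get()};
    }

    public static void main(String[] args) {
        int[] totals = incrementConcurrently(100, 10_000);
        System.out.println("unsafe: " + totals[0] + ", atomic: " + totals[1] + " (expected 1000000)");
    }
}
```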

Deadlocks

Deadlocks happen when two or more threads are waiting indefinitely for each other to release resources, resulting in a standstill. Deadlocks can severely impact the performance and stability of an application.
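One common way to rule out this kind of deadlock is to acquire locks in a single global order. The sketch below (JDK 21 or later) uses a hypothetical Account type and always locks the account with the smaller id first, so two threads transferring money in opposite directions can never each hold one lock while waiting for the other. It uses ReentrantLock rather than synchronized, which on JDK 21 also keeps a virtual thread that waits for a lock from pinning its carrier.

```java
import java.util.concurrent.locks.ReentrantLock;

// Assumes JDK 21+. Account is a hypothetical type for illustration.
class LockOrderingDemo {

    static final class Account {
        final int id;
        final ReentrantLock lock = new ReentrantLock();
        long balance;

        Account(int id, long balance) {
            this.id = id;
            this.balance = balance;
        }
    }

    // Locks are always taken in ascending id order, regardless of transfer direction.
    static void transfer(Account from, Account to, long amount) {
        Account first = from.id < to.id ? from : to;
        Account second = from.id < to.id ? to : from;
        first.lock.lock();
        try {
            second.lock.lock();
            try {
                from.balance -= amount;
                to.balance += amount;
            } finally {
                second.lock.unlock();
            }
        } finally {
            first.lock.unlock();
        }
    }

    // Two virtual threads transfer in opposite directions: the classic deadlock
    // recipe when locks are taken in argument order instead of a global order.
    static long[] transferBothWays(int rounds) throws InterruptedException {
        Account a = new Account(1, 1_000);
        Account b = new Account(2, 1_000);
        Thread t1 = Thread.ofVirtual().start(() -> {
            for (int i = 0; i < rounds; i++) transfer(a, b, 1);
        });
        Thread t2 = Thread.ofVirtual().start(() -> {
            for (int i = 0; i < rounds; i++) transfer(b, a, 1);
        });
        t1.join();
        t2.join();
        return new long[] {a.balance, b.balance};
    }

    public static void main(String[] args) throws InterruptedException {
        long[] balances = transferBothWays(100_000);
        System.out.println(balances[0] + " " + balances[1]); // prints "1000 1000"
    }
}
```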

Resource Starvation

Resource starvation occurs when a thread is perpetually denied access to necessary resources, usually due to poor scheduling or resource management, leading to degraded performance or even system failure.

Priority Inversion

Priority inversion is a situation where a lower-priority thread holds a resource needed by a higher-priority thread, leading to the higher-priority thread being indirectly blocked. This can result in unpredictable behavior and performance issues.

Common Pitfalls in Concurrency

Thread Safety Issues

Thread safety refers to the correct handling of shared data in a multi-threaded environment. Failing to ensure thread safety can lead to data corruption and unpredictable behavior.

Improper Locking Mechanisms

Locks are used to synchronize access to shared resources, but improper use of locks can lead to performance bottlenecks, deadlocks, and race conditions. Choosing the right locking strategy is crucial for maintaining application performance and correctness.

Inconsistent State

Inconsistent state occurs when shared data is modified by multiple threads in an unsynchronized manner, leading to corrupted data. Ensuring data consistency through proper synchronization is essential for avoiding this pitfall.

Overhead of Context Switching

Context switching, the process of saving and restoring the state of a thread when switching between threads, can be costly in terms of performance. Excessive context switching can lead to degraded performance, making it important to minimize the frequency of context switches.

Pitfall Avoidance Strategies

Best Practices for Thread Safety

Ensuring thread safety involves using synchronization mechanisms like locks, atomic operations, and thread-safe data structures. Developers should avoid using shared mutable state whenever possible and prefer immutability and stateless design patterns.
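A minimal sketch of these practices, assuming JDK 21 or later: the input documents are immutable records, so they can be shared between threads without locks, and the only shared mutable state is a ConcurrentHashMap updated through merge, which applies each per-key update atomically. The Document record and countWords method are hypothetical.

```java
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

// Assumes JDK 21+. Document is a hypothetical immutable input type.
class ThreadSafeWordCount {

    // Immutable value: safe to share between threads without synchronization.
    record Document(String title, List<String> words) {
        Document {
            words = List.copyOf(words); // defensive, unmodifiable copy
        }
    }

    static Map<String, Integer> countWords(List<Document> docs) {
        Map<String, Integer> counts = new ConcurrentHashMap<>();
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (Document doc : docs) {
                executor.submit(() -> {
                    for (String word : doc.words()) {
                        counts.merge(word, 1, Integer::sum); // atomic per-key update
                    }
                });
            }
        } // close() waits for all tasks
        return counts;
    }

    public static void main(String[] args) {
        List<Document> docs = List.of(
                new Document("a", List.of("virtual", "threads", "are", "cheap")),
                new Document("b", List.of("virtual", "threads", "block", "cheaply")));
        System.out.println(countWords(docs).get("virtual")); // prints 2
    }
}
```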

Efficient Locking Techniques

Efficient locking techniques include using fine-grained locks, lock-free data structures, and avoiding nested locks (which can lead to deadlocks). Developers should also consider using read-write locks where appropriate to allow concurrent reads while synchronizing writes.
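The read-write lock idea can be sketched with java.util.concurrent.locks.ReentrantReadWriteLock. In this hypothetical ReadMostlyCache, any number of threads may hold the read lock together, while a writer takes the exclusive write lock. For a plain map, a ConcurrentHashMap is usually simpler; the explicit lock is shown only to illustrate the technique, and it tends to pay off when reads are frequent and each read does more work than a single lookup.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.locks.ReadWriteLock;
import java.util.concurrent.locks.ReentrantReadWriteLock;

// Assumes JDK 21+ for the virtual threads used in main.
class ReadMostlyCache {
    private final Map<String, String> data = new HashMap<>();
    private final ReadWriteLock rwLock = new ReentrantReadWriteLock();

    String get(String key) {
        rwLock.readLock().lock();  // shared: many readers may hold it at once
        try {
            return data.get(key);
        } finally {
            rwLock.readLock().unlock();
        }
    }

    void put(String key, String value) {
        rwLock.writeLock().lock(); // exclusive: blocks readers and other writers
        try {
            data.put(key, value);
        } finally {
            rwLock.writeLock().unlock();
        }
    }

    public static void main(String[] args) throws InterruptedException {
        ReadMostlyCache cache = new ReadMostlyCache();
        Thread writer = Thread.ofVirtual().start(() -> cache.put("mode", "virtual"));
        writer.join();
        Thread reader = Thread.ofVirtual().start(() -> System.out.println(cache.get("mode"))); // prints "virtual"
        reader.join();
    }
}
```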

Reducing Context Switching Overhead

To reduce the overhead of context switching between platform threads, developers can batch small tasks, avoid creating more threads than the workload needs, and use thread pools that reuse existing threads instead of creating a new one for each task.

Proper Resource Allocation and Deallocation

Proper resource management involves allocating resources such as memory, file handles, and network connections only when needed and ensuring they are released promptly after use. This prevents resource leaks and helps avoid issues like resource starvation.
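In Java, try-with-resources is the idiomatic way to guarantee that resources are released promptly, even when a task fails. The sketch below assumes JDK 21 or later, where ExecutorService implements AutoCloseable and close() waits for submitted tasks to finish; a StringReader stands in for a real file or network connection, and ScopedResources and countLines are illustrative names.

```java
import java.io.BufferedReader;
import java.io.StringReader;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Assumes JDK 21+ (ExecutorService has been AutoCloseable since JDK 19).
class ScopedResources {

    // Counts lines on a virtual thread; the executor and the reader are both
    // released automatically when their try blocks exit, even on exceptions.
    static long countLines(String text) throws InterruptedException, ExecutionException {
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            Future<Long> lines = executor.submit(() -> {
                // StringReader stands in for a file or socket stream.
                try (BufferedReader reader = new BufferedReader(new StringReader(text))) {
                    return reader.lines().count();
                }
            });
            return lines.get();
        }
    }

    public static void main(String[] args) throws Exception {
        System.out.println(countLines("first\nsecond\nthird")); // prints 3
    }
}
```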

Programming Languages and Virtual Threads

Java and Project Loom

Project Loom is an OpenJDK project that introduced virtual threads to the Java platform; they became a final feature in JDK 21 (JEP 444). It aims to simplify concurrent programming by letting developers write straightforward, blocking code without the scalability limits of traditional threads.

Virtual Threads in C++

C++ has no built-in equivalent of virtual threads, although C++20 added language support for coroutines. Libraries such as Boost.Fiber provide user-space threads with similar goals, allowing developers to manage concurrency with lightweight threads.

Concurrency Models in Python

Python, known for its simplicity and readability, offers several concurrency models, including threading, multiprocessing, and asyncio for asynchronous programming. It has no native virtual threads, but third-party libraries such as greenlet (and gevent, which builds on it) offer similar lightweight, cooperatively scheduled threads.

Emerging Languages and Concurrency Innovation

Newer languages such as Rust and Go have built-in support for modern concurrency models. Rust's ownership and borrowing rules, enforced at compile time by the borrow checker, prevent data races in safe code, while Go features goroutines, lightweight threads managed by the Go runtime that are similar in concept to virtual threads.

Tools and Libraries for Managing Concurrency

Popular Concurrency Libraries

Libraries and frameworks such as Akka (for actor-based concurrency), Netty (for asynchronous networking), and the JDK's own ForkJoinPool (for divide-and-conquer parallel processing) provide powerful tools for managing concurrency in applications.
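As a sketch of the ForkJoinPool style of parallelism, the hypothetical ParallelSum task below splits an array in half until the pieces are small, sums those directly, and combines the results. This is CPU-bound work, which is what ForkJoinPool targets; virtual threads would not speed it up. The THRESHOLD value is an arbitrary assumption that would need tuning for a real workload.

```java
import java.util.concurrent.ForkJoinPool;
import java.util.concurrent.RecursiveTask;

// Divide-and-conquer sum over the half-open range [from, to) of an array.
class ParallelSum extends RecursiveTask<Long> {
    private static final int THRESHOLD = 10_000; // below this size, sum sequentially (assumed value)
    private final long[] values;
    private final int from;
    private final int to;

    ParallelSum(long[] values, int from, int to) {
        this.values = values;
        this.from = from;
        this.to = to;
    }

    @Override
    protected Long compute() {
        if (to - from <= THRESHOLD) {
            long sum = 0;
            for (int i = from; i < to; i++) {
                sum += values[i];
            }
            return sum;
        }
        int mid = (from + to) >>> 1;
        ParallelSum left = new ParallelSum(values, from, mid);
        ParallelSum right = new ParallelSum(values, mid, to);
        left.fork();                     // schedule the left half asynchronously
        long rightSum = right.compute(); // compute the right half in this worker
        return left.join() + rightSum;   // wait for the left half and combine
    }

    static long sum(long[] values) {
        return ForkJoinPool.commonPool().invoke(new ParallelSum(values, 0, values.length));
    }

    public static void main(String[] args) {
        long[] values = new long[1_000_000];
        for (int i = 0; i < values.length; i++) {
            values[i] = i + 1;
        }
        System.out.println(sum(values)); // prints 500000500000
    }
}
```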

Monitoring and Debugging Tools

Monitoring and debugging tools such as VisualVM, JProfiler, and the thread views in IDE debuggers like IntelliJ IDEA help developers identify and resolve concurrency issues such as contention and deadlocks, ensuring that their applications run smoothly.

Case Studies of Concurrency Tools in Action

Case studies of real-world applications using these tools illustrate how they can be effectively used to manage concurrency, improve performance, and avoid common pitfalls.

Best Practices in Concurrency Design

Architecting for Concurrency

When designing applications with concurrency in mind, it’s important to consider the architecture early on. This includes deciding on the appropriate concurrency model, choosing the right data structures, and ensuring that the design allows for scalability and maintainability.

Designing Scalable Systems

Scalable systems can handle increasing loads by efficiently managing resources and distributing tasks across multiple threads or processes. This involves designing systems that can scale horizontally and vertically as needed.

Code Reviews and Concurrency

Regular code reviews are essential for catching concurrency issues early. Reviewers should focus on potential threading issues, such as improper synchronization, deadlocks, and race conditions, ensuring that the code follows best practices for concurrency.

Testing for Concurrency Issues

Testing for concurrency issues requires specialized techniques, such as stress testing, load testing, and using tools that can simulate race conditions and deadlocks. Proper testing helps ensure that the application performs reliably under concurrent loads.
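A simple stress-test harness, sketched below under the assumption of JDK 21 or later, starts many virtual threads, holds them behind a CountDownLatch so they all begin at the same moment, and then checks an invariant, repeating for several rounds. The SafeCounter type is a hypothetical stand-in for whatever component is under test. Passing such a test raises confidence but does not prove the absence of races; dedicated harnesses such as OpenJDK's jcstress explore interleavings more systematically.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicInteger;

// Assumes JDK 21+. SafeCounter is a hypothetical component under test.
class StressTest {

    static final class SafeCounter {
        private final AtomicInteger value = new AtomicInteger();
        void increment() { value.incrementAndGet(); }
        int get() { return value.get(); }
    }

    // In each round, all tasks wait on a latch and then hammer the counter at once.
    // Returns false as soon as a round loses an update.
    static boolean stress(int tasks, int incrementsPerTask, int rounds) {
        for (int round = 0; round < rounds; round++) {
            SafeCounter counter = new SafeCounter();
            CountDownLatch startGate = new CountDownLatch(1);
            try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
                for (int t = 0; t < tasks; t++) {
                    executor.submit(() -> {
                        startGate.await(); // each task blocks here until the gate opens
                        for (int i = 0; i < incrementsPerTask; i++) {
                            counter.increment();
                        }
                        return null;
                    });
                }
                startGate.countDown(); // release all tasks at the same moment
            } // close() waits for every task in this round
            if (counter.get() != tasks * incrementsPerTask) {
                return false;
            }
        }
        return true;
    }

    public static void main(String[] args) {
        System.out.println(stress(1_000, 100, 10) ? "no lost updates" : "lost updates detected");
    }
}
```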

Performance Tuning in Concurrency

Profiling Multithreaded Applications

Profiling tools can help identify bottlenecks in multithreaded applications, such as excessive locking, thread contention, or inefficient use of resources. Profiling is a critical step in optimizing the performance of concurrent applications.

Optimizing Thread Pools

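Thread pools allow platform threads to be reused, reducing the overhead of creating and destroying them, and tuning the size and configuration of these pools can lead to significant performance improvements. Virtual threads are different: they are cheap enough to create one per task and are not meant to be pooled. When the real purpose of a pool is to limit concurrent access to a scarce resource, such as database connections, a semaphore expresses that limit directly.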
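When the goal is to limit how many tasks use a scarce resource at once, one option with virtual threads is a java.util.concurrent.Semaphore rather than a fixed-size pool. In the sketch below (JDK 21 or later), every task gets its own virtual thread, but the semaphore admits at most a fixed number of them into the section that uses the limited resource. The method also records the highest number of tasks observed inside that section, which should never exceed the permit count. BoundedConcurrency and the 5 ms sleep standing in for a resource call are illustrative assumptions.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Semaphore;
import java.util.concurrent.atomic.AtomicInteger;

// Assumes JDK 21+. The permit count models a hypothetical limit,
// such as the size of a database connection pool.
class BoundedConcurrency {

    // Runs `tasks` virtual threads, admitting at most `permits` into the guarded
    // section at once; returns the highest concurrency observed inside it.
    static int run(int tasks, int permits) {
        Semaphore limit = new Semaphore(permits);
        AtomicInteger inside = new AtomicInteger();
        AtomicInteger maxInside = new AtomicInteger();
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int i = 0; i < tasks; i++) {
                executor.submit(() -> {
                    limit.acquire(); // waits without holding an OS thread if no permit is free
                    try {
                        int now = inside.incrementAndGet();
                        maxInside.accumulateAndGet(now, Math::max);
                        Thread.sleep(5); // stand-in for a call to the limited resource
                    } finally {
                        inside.decrementAndGet();
                        limit.release();
                    }
                    return null;
                });
            }
        }
        return maxInside.get();
    }

    public static void main(String[] args) {
        System.out.println("max concurrent: " + run(1_000, 10)); // never more than 10
    }
}
```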

Fine-Tuning Synchronization Primitives

Fine-tuning synchronization primitives, such as locks, semaphores, and barriers, can help reduce contention and improve performance. Developers should carefully choose and configure these primitives based on the specific needs of their application.

Avoiding Bottlenecks in Concurrency

Bottlenecks can occur when too many threads compete for the same resources. To avoid bottlenecks, developers should identify and eliminate hotspots in the code, optimize resource allocation, and ensure that tasks are evenly distributed across threads.
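A frequent hotspot is a single counter that every thread updates. The sketch below (JDK 21 or later; names are illustrative) uses java.util.concurrent.atomic.LongAdder, which spreads concurrent increments across internal cells and adds them up on demand, so heavily contended updates do not all compete for one memory location as they would with an AtomicLong. The trade-off is that sum() is only exact once concurrent updates have stopped.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.LongAdder;

// Assumes JDK 21+.
class HotCounter {

    // Every task bumps the same counter; LongAdder reduces contention on it.
    static long countHits(int tasks, int hitsPerTask) {
        LongAdder hits = new LongAdder();
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int t = 0; t < tasks; t++) {
                executor.submit(() -> {
                    for (int i = 0; i < hitsPerTask; i++) {
                        hits.increment();
                    }
                });
            }
        } // all writers have finished here, so sum() is exact
        return hits.sum();
    }

    public static void main(String[] args) {
        System.out.println(countHits(1_000, 1_000)); // prints 1000000
    }
}
```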

Security Considerations in Concurrency

Preventing Race Condition Exploits

Race conditions can be exploited by attackers to gain unauthorized access to data or cause unexpected behavior in an application. Developers should ensure that their code is free from race conditions and use secure coding practices to prevent such vulnerabilities.

Ensuring Data Integrity

Concurrency can lead to data integrity issues if not properly managed. Techniques such as atomic operations, transaction management, and consistency checks can help ensure that data remains accurate and consistent across concurrent operations.
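Atomic operations can enforce an invariant without locks. The sketch below (JDK 21 or later) models a hypothetical account whose balance must never go negative: withdraw() performs the balance check and the update as one compare-and-set, retrying if another thread changed the balance in between. A separate check followed by a separate update would be a check-then-act race, the same pattern that race-condition exploits target.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.atomic.AtomicLong;

// Assumes JDK 21+. NoOverdraft is a hypothetical account type.
class NoOverdraft {
    private final AtomicLong balance;

    NoOverdraft(long initial) {
        balance = new AtomicLong(initial);
    }

    // Check and update as one atomic step: retry the compare-and-set until it
    // succeeds or the funds are insufficient.
    boolean withdraw(long amount) {
        while (true) {
            long current = balance.get();
            if (current < amount) {
                return false; // would overdraw
            }
            if (balance.compareAndSet(current, current - amount)) {
                return true;
            }
            // another thread changed the balance in between; re-read and retry
        }
    }

    long balance() {
        return balance.get();
    }

    // Fires `attempts` concurrent withdrawals of 1; returns {final balance, successes}.
    static long[] drain(long initial, int attempts) {
        NoOverdraft account = new NoOverdraft(initial);
        AtomicInteger successes = new AtomicInteger();
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int i = 0; i < attempts; i++) {
                executor.submit(() -> {
                    if (account.withdraw(1)) {
                        successes.incrementAndGet();
                    }
                });
            }
        }
        return new long[] {account.balance(), successes.get()};
    }

    public static void main(String[] args) {
        long[] result = drain(100, 1_000);
        // prints "balance 0, successful withdrawals 100"
        System.out.println("balance " + result[0] + ", successful withdrawals " + result[1]);
    }
}
```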

Concurrency and Security Audits

Regular security audits should include checks for concurrency-related vulnerabilities, such as race conditions, deadlocks, and improper synchronization. Audits help identify and address potential security risks before they can be exploited.

Case Studies of Concurrency in Real-World Systems

High-Throughput Web Servers

Web servers that handle thousands of concurrent connections are a natural fit for virtual threads. Because a request usually spends most of its time waiting on the network, a database, or other services, a server can dedicate one virtual thread to each request and still handle a large number of connections efficiently, improving throughput.
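As a sketch of one virtual thread per request, assuming JDK 21 or later, the JDK's built-in com.sun.net.httpserver.HttpServer (a lightweight server in the jdk.httpserver module, not a production web server) can be given Executors.newVirtualThreadPerTaskExecutor() as its executor, so each exchange is handled on its own virtual thread. The /hello path, the port, and the response text are illustrative assumptions.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.nio.charset.StandardCharsets;
import java.util.concurrent.Executors;

// Assumes JDK 21+ and the jdk.httpserver module (included in standard JDK builds).
class VirtualThreadHttpServer {

    // Starts a server on `port` (0 picks a free port) that handles every
    // request on its own virtual thread.
    static HttpServer start(int port) throws IOException {
        HttpServer server = HttpServer.create(new InetSocketAddress(port), 0);
        server.createContext("/hello", exchange -> {
            // A real handler would typically do blocking work here, such as a database call.
            byte[] body = ("handled by virtual thread: " + Thread.currentThread().isVirtual())
                    .getBytes(StandardCharsets.UTF_8);
            exchange.sendResponseHeaders(200, body.length);
            try (OutputStream out = exchange.getResponseBody()) {
                out.write(body);
            }
        });
        server.setExecutor(Executors.newVirtualThreadPerTaskExecutor());
        server.start();
        return server;
    }

    public static void main(String[] args) throws IOException {
        HttpServer server = start(8080); // port 8080 is an arbitrary choice
        System.out.println("Listening on port " + server.getAddress().getPort());
    }
}
```

With main running, a request to http://localhost:8080/hello should return "handled by virtual thread: true".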

Concurrent Processing in Gaming

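In gaming, concurrency is essential for managing multiple game elements, such as AI, physics, and player interactions, at the same time. Much of this work is CPU-bound and tied to the frame rate, where virtual threads offer little benefit; they are better suited to I/O-heavy tasks such as network communication or loading assets.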

Financial Systems and Concurrency

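Financial systems require high reliability and low latency, making concurrency crucial. Virtual threads can help these systems handle numerous simultaneous transactions that spend time waiting on I/O, such as database or network calls, while maintaining data integrity. They are designed to increase throughput rather than to lower the latency of individual operations.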

Concurrency in Large-Scale Cloud Applications

Cloud applications often need to scale dynamically to handle varying loads. Virtual threads enable these applications to manage large numbers of concurrent users and processes, improving scalability and performance in a cloud environment.

Future of Concurrency and Virtual Threads

Trends in Concurrency Models

As software complexity increases, new concurrency models are emerging to address the challenges of managing parallelism. These models focus on simplifying the development process while improving performance and scalability.

Innovations in Virtual Thread Implementations

Ongoing research and development are leading to new implementations and optimizations of virtual threads, making them more efficient and easier to use. These innovations are expected to further enhance the adoption of virtual threads in software development.

The Role of AI in Managing Concurrency

Artificial intelligence is beginning to play a role in managing concurrency, with AI-driven tools that can automatically optimize thread management, detect concurrency issues, and suggest improvements to developers.

Predictions for Future Developments

The future of concurrency is likely to see continued advancements in virtual threads, with more programming languages and platforms adopting this approach. AI and machine learning may also become part of concurrency tooling, changing how developers approach parallelism in their applications.

Conclusion

Recap of Key Points

This article explored the concept of virtual threads and their role in modern concurrency management. We discussed the benefits of virtual threads, common concurrency challenges, and strategies for avoiding pitfalls.

The Importance of Mastering Concurrency

Mastering concurrency is crucial for developing high-performance, scalable applications. By understanding and effectively managing concurrency, developers can build software that meets the demands of modern users and systems.

Encouragement to Embrace New Concurrency Tools and Techniques

As technology evolves, so too must our approaches to concurrency. Developers are encouraged to embrace new tools and techniques, such as virtual threads, to stay ahead of the curve and deliver robust, efficient applications.

FAQs

1. What are virtual threads, and how do they differ from traditional threads?

Virtual threads are lightweight threads managed by the JVM rather than the operating system, allowing for more efficient concurrency with less resource overhead compared to traditional threads.

2. What are the common pitfalls in concurrency management?

Common pitfalls include race conditions, deadlocks, resource starvation, and priority inversion. These issues can lead to unpredictable behavior and performance problems in concurrent applications.

3. How can I avoid deadlocks when using concurrency?

To avoid deadlocks, use proper locking mechanisms, avoid nested locks, and ensure that resources are acquired and released in a consistent order across threads.

4. What tools can help in debugging concurrency issues?

Tools like VisualVM, JProfiler, and concurrency visualizers in IDEs can help identify and resolve concurrency issues by providing insights into thread behavior and resource usage.

5. How do virtual threads improve scalability?

Virtual threads allow for the creation and management of a large number of threads with minimal overhead, enabling applications to scale efficiently to handle increased loads.

6. What is the future of concurrency in software development?

The future of concurrency will likely see further advancements in virtual threads, increased adoption of new concurrency models, and the integration of AI to enhance concurrency management.